User Experience is More than Just the UI

There has been a lot of ink spilt on UI/UX, but remember that the developer's experience is often quite different from the end customer's. Design for the lowest spec possible, and make the experience acceptable there.

A while back, I read a blog post that got me thinking. Its premise was that developers should always have the fastest, best hardware to minimize downtime. Waiting 12 seconds to compile a module was too long, and encouraged the developer’s attention to stray to Reddit, or some other time suck, and then they would lose a half hour. To reduce this tendency, developers should have wicked awesome machines.

While I can sympathize with the sentiment, it is bad advice. The company I worked for made scientific instruments. The instruments had controllers that were essentially PCs. They ran Windows, and they ran our software, which in turn ran the instrument. But these “controllers” were never leading-edge computers. They were what you could source from Dell’s “stable” product line, meaning that if you picked a configuration, Dell wouldn’t mess with it for 18 months or so, and you could count on it “just working”. We chose those computers to be fast enough, but not too expensive (because COGS is important). Beyond that, the company had been selling instruments for 15+ years, so even alongside the then-current generation there were a lot of customers with older systems. Think Pentium 4s, Windows XP, and 1 GB of RAM (if that).

Remember: your users won’t have uniform, top-of-the-line hardware. Test usability on typical hardware and avoid support bottlenecks.

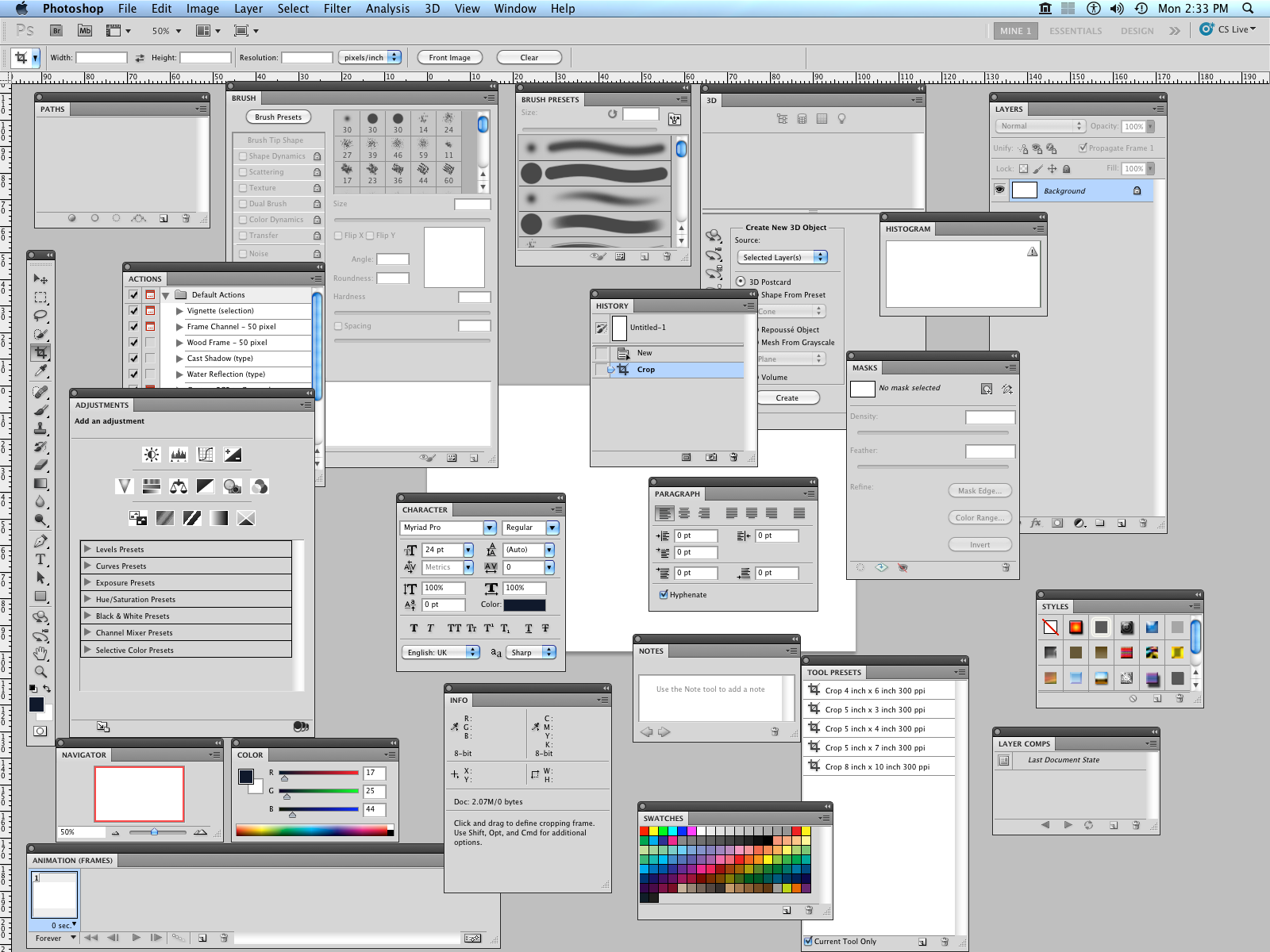

A truism in the instrument business is that people expect support for a LONG time. These are expensive systems, and customers expect, scratch that, demand 5 or more years of life. People also expect perpetual free software upgrades. But what happens when your developers are using a 12-core Xeon system with 24 GB of RAM and an SSD RAID array on their desktop? [1]

- All incentive to write efficient code is eliminated – Why clean up a messy class when you can just keep adding to it? Why worry about efficient data handling when you have enough memory to run 50 VMs?

- Cleaning the cruft is never in the plan – Who cares if your executable becomes 80 or 90 megabytes? Why bother deprecating legacy? Why rewrite spaghetti legacy code when you can just wrap it and make an object class?

- You poo-poo support calls about slow or unresponsive software – Of course you can’t replicate the issue. You are running on a machine that is such overkill (and so expensive it would never be specified for the product) that you never see the dialog boxes that take 10 seconds to draw, the data files that crash the system because it can’t allocate enough memory, or the UI that becomes unresponsive for 30 seconds at a time because it is trying to do real-time 3D manipulation of 90 gigabyte data sets.

The answer isn’t to take the fast systems away from the developers and make them suffer. Instead, you need to codify the practice of testing on PCs that are two or three generations old. Also, if you ask the developers whether they do any code profiling and get blank stares (yes, this happened at one stop in my career), you need to get them to look for memory, disk, and CPU bottlenecks with some of the excellent tools out there.
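For anyone who has never done it, here is a rough idea of what a first profiling pass can look like. This is only a minimal sketch in Python, using the standard library's `cProfile` (CPU time per function) and `tracemalloc` (peak memory). The functions `load_samples`, `smooth`, and `profile_call` are hypothetical stand-ins I made up for illustration, not code from any real instrument software, and the workload size is arbitrary.

```python
import cProfile
import io
import pstats
import tracemalloc


def load_samples(n):
    # Hypothetical stand-in for reading instrument data into memory.
    return [float(i) for i in range(n)]


def smooth(samples, window=5):
    # Deliberately naive moving average: re-sums the window at every step.
    return [
        sum(samples[i:i + window]) / window
        for i in range(len(samples) - window + 1)
    ]


def profile_call(func, *args, **kwargs):
    """Run func once; return (result, peak_bytes, cpu_report_text)."""
    profiler = cProfile.Profile()
    tracemalloc.start()
    profiler.enable()
    try:
        result = func(*args, **kwargs)
    finally:
        profiler.disable()
        # get_traced_memory() returns (current, peak) in bytes.
        _, peak_bytes = tracemalloc.get_traced_memory()
        tracemalloc.stop()
    buffer = io.StringIO()
    stats = pstats.Stats(profiler, stream=buffer)
    # Show the ten entries with the largest cumulative time.
    stats.sort_stats("cumulative").print_stats(10)
    return result, peak_bytes, buffer.getvalue()


def workload():
    return smooth(load_samples(200_000))


if __name__ == "__main__":
    _, peak, report = profile_call(workload)
    print(f"peak traced memory: {peak / 1e6:.1f} MB")
    print(report)
```

Numbers collected on a developer workstation only tell you where the time and memory go, not how bad things will feel on a customer's older PC, so the same measurement is worth repeating on the slowest hardware you still support.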

The surprising thing to me is how often really good developers just don’t know how to check for, and code for, efficient use of a computer's resources. I suspect that this is due to the rapid increase in computer speed, and the falling cost of things like RAM, SSDs, and very fast GPUs. I am old. Glacially old. I learned to program on the venerable PDP-11/70, in assembly language, and on the Cyber 730 in Fortran, back when you would take your object code, disassemble it, and optimize the inner loops to maximize performance. A lost art except in the highest performance environments.

[1] When I wrote this in 2010, this was what the developers had on their desks. Now, obviously, the specs are a lot higher.